I recently stumbled upon these awesome distributed system challenges by fly.io. I was pleasantly surprised because I’ve always been on the lookout for interesting dev-related challenges. You know, besides the usual competitive programming stuff, which I don’t really consider all that dev-focused.

I think every backend engineer must give these challenges a shot. They’re not just educational but also a lot of fun. Plus, they really propel you forward in your developer journey.

Before diving into these challenges, I had some experience with Golang, but my knowledge of distributed systems was pretty basic. I knew about the CAP Theorem, but if you asked me what each letter stood for, I might have guessed “C” for consistency, “A” for availability, and “P” for… maybe permanence? 😄

So, if you’re a beginner too, don’t get intimidated by the challenges. This is going to be a fun and detailed walkthrough of all the challenges, approaches I took to solve them including the local setup and documentation navigation.

Note: If you are familiar with the problems, and looking for solutions, you can skip this article and head to the solutions directly:

Challenge 2

Challenge 3a | Challenge 3b&3c | Challenge 3d | Challenge 3e

Challenge 4

Understanding the architecture

Before we dive into setting up our system, let’s understand how do you solve the challenges and evaluate your solutions. Since, it is completely different from cp challenges, it is critical to understand the tools provided by fly.io team to test our distributed systems.

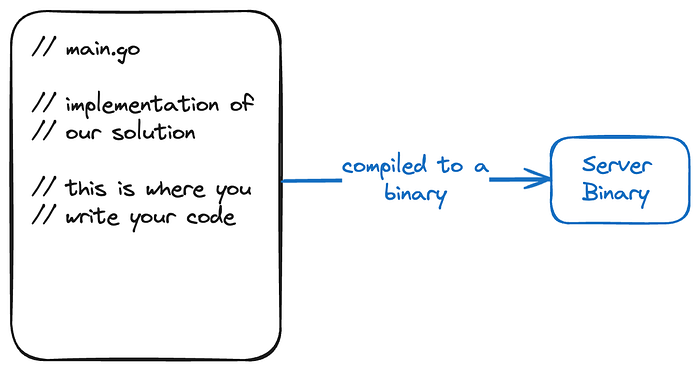

Your code is compiled to a binary

Maelstrom binary deploys a distributed system using your compiled binary and a set of clients

The above diagrams is pretty self-explanatory. We download the Maelstrom Binary (a tool) from their website which deploys our code as a server node in the distributed system.

Hence, we need to implement the node servers with the following assumptions of a generic distributed system:

- Clients might make concurrent calls on a single node, i.e. a node might be handling multiple clients at the same time in as many go-routines.

- Concurrent calls might be distributed among the node servers.

- There is no client-server mapping, any client’s request might be served by any server-node.

- More about communication between nodes later.

Setting up the playground

1. Download the Maelstrom Binary

a. Install Deps:

Maelstrom is built in Clojure so you’ll need to install OpenJDK. It also provides some plotting and graphing utilities which rely on Graphviz & gnuplot. If you’re using Homebrew, you can install these with this command:

brew install openjdk graphviz gnuplot

You can find more details on the Prerequisites section on the Maelstrom docs.

b. Install the binary:

You can find the binary here: Maelstrom 0.2.3

2. Setting up your local code

mkdir gossip-gloomers

cd gossip-gloomers

mkdir maelstrom-echo

cd maelstrom-echo

touch main.go

go mod init maelstrom-echo

go mod tidy

Now you can start coding in main.go

The first challenge of maelstrom is a walkthrough challenge in itself to explain the setup. We will follow the same procedure

So you can follow the instructions below:

Copy the following file in main.go

package mainimport (

"encoding/json"

"log"

"os" maelstrom "github.com/jepsen-io/maelstrom/demo/go"

)func main() {

n := maelstrom.NewNode()

n.Handle("echo", func(msg maelstrom.Message) error {

var body map[string]any

if err := json.Unmarshal(msg.Body, &body); err != nil {

return err

}

body["type"] = "echo_ok"

return n.Reply(msg, body)

})

if err := n.Run(); err != nil {

log.Printf("ERROR: %s", err)

os.Exit(1)

}

}

Now run the following commands:

# make sure GOPATH is set and added to your PATH env

go mod tidy && go mod vendor

go install .# you should be able to access this cmd

maelstrom-echo

Using go install . , you compiled your main.go to a binary. Then you can use the maelstrom binary (downloaded in Step 1), to test your compiled binary.

/path/to/downloaded/maelstrom/binary test -w echo --bin /path/to/compiled/maelstrom-echo --node-count 1 --time-limit 10

Breaking down the command:

/path/to/downloaded/maelstrom/binary— The path to the maelstrom binary you downloadedtest— the testing command name-w echo— the workload argument. This tells the binary, which workload (challenge) you are testing for--bin /path/to/compiled/maelstrom-echo— the path to your compiled binary. This is the code (binary) to be tested--node-count=1— The number of nodes (server) to be deployed in the distributed system to be tested.--time-limit— The time for which the system is tested

There are more available arguments, but we would get to know them later. For now make sure you get an idea of what we’re trying to do.

Now that you have a little understanding, let’s go through the code in main.go

import maelstrom "github.com/jepsen-io/maelstrom/demo/go"

We import the maelstrom golang library which helps us define the node, and define the handlers for handling requests from the clients. We can think of these handlers as normal http requests.

n := maelstrom.NewNode()

Here, we define n as the node. Now we can define the handlers on this node object using n.Handle()

n.Handle("echo", func(msg maelstrom.Message) error {

var body map[string]any

if err := json.Unmarshal(msg.Body, &body); err != nil {

return err

}

body["type"] = "echo_ok"

return n.Reply(msg, body)

})

n.Handle() takes in the first parameter as a string. This is what maelstrom calls RPC . In simpler terms, you can think of this as a the router value (like /ping)in a http call. This is the identifier to map a function to the request type.

The second parameter is the handler function. It provides the request message by the object msg of the type maelstrom.Message . This object generally looks like:

{

"src": "c1",

"dest": "n1",

"body": {

"type": "echo",

"msg_id": 1,

"echo": "Please echo 35"

}

}

src : c1refers to client with id c1

dest : n1 refers to node with id n1

The body contains other information (like POST data). It will always contain the field type which is equal to the RPC value.

We unmarshal msg.Body into map[string]any . The problem (Challenge 1) states that the node only needs to return echo_ok as the response to the request. So we change body[type]="echo_ok" and send a message back to the client using n.Reply()

You can send message to any nodes/clients in the cluster using

n.Send,n.RPCorn.SyncRPCby specifying the address. You don’t need to specify address when usingn.Replysince it sends back the response to the requester.

if err := n.Run(); err != nil {

log.Printf("ERROR: %s", err)

os.Exit(1)

}

And finally we run the node service using n.Run() at the end of the main function. This is similar to running a http server. This is a blocking command and runs until a fatal error.

You can view the entire source code here

The objective of this article was to make you comfortable with the stack. We haven’t even started solving the challenges yet. We will cover each challenge in a separate article, so that I can cover each of them in detail.

Hope you guys found it interesting. I would really recommend you to create a new directory gossip-gloomers/maelstrom-unique-ids and try to solve Challenge 2.

The real fun is in trying yourself and scratching your heads all over it. Then feel free to jump on to the next article if you are stuck for a while (atleast a day or 2), or even if you solved it (just in case).

Hope this was worth your time :)

Here is the link to the detailed walkthrough of Challenge2